How to Load Large CSVs into HEAVY.AI using SCP and COPY FROM

Download HEAVY.AI Free, a full-featured version available for use at no cost.

GET FREE LICENSE

If you are anything like us, you source your data from just about everywhere.

You have access to internal databases, data warehouses, open sources, external stakeholder data, and flat files on your local machine.

This tutorial will focus on that last item and show you how to load datasets into HEAVY.AI using the Secure Copy Protocol (SCP) and the COPY FROM SQL command.

This method is one of the fastest ways to ingest large flat files, like CSVs, into HEAVY.AI and it only takes a few steps.

Here are the actions we'll cover:

- Find your credentials (e.g., .pem key from AWS)

- Run SCP command to copy the local dataset to your server (like an AWS EC2 instance)

- Copy the dataset from the server's home directory into the directory that HEAVY.AI can 'see'

- Run COPY FROM to import the data into the HEAVY.AI table

Finding Your Credentials

You'll need your server credentials, like a .pem key, to use SCP. Most people store their .pem key file somewhere like ~/.ssh on a Mac.

In this example, our .pem key lives in the home .ssh folder. We can access this directory within Finder using the 'Go to the folder' tool or by navigating to the home directory and pressing 'shift+command+.' to show all hidden directories.

Copy the Local Files to the Server Using the SCP Command

Next, we'll run the SCP command from our local machine's terminal to copy the local files to the remote server.

The SCP command has a few essential parts, which we promise to keep brief. Amazon has some excellent documentation here if you wish to learn more.

Here's what we ran:

Here are the different parts of that command:

- SCP -i calls the command and uses the '-i' for the identity file, which in this case, is our .pem key

- /path/my-key-pair.pem is the path to our .pem key

- SFFind_Neighborhoods.csv is the file we're uploading. We ran this command from within the directory that houses our CSV (you will need the exact path of your file).

- Finally, the my-instance-user-name@my-instance-public-dns-name:~/. tells SCP which server and directory path you'd like to copy the file to directly. In our example, '~/.' is the home directory.

If you have not yet SSH'd into the machine, you may see the following response:

You can type yes and press enter to continue.

In our example, we proceeded with yes and waited for it to upload successfully:

Once the file finished uploading, we SSH'd into our ec2 instance and saw the file in the home directory.

Then we needed to copy the file from the home directory (~) to a directory where HEAVY.AI could see it:

COPY FROM

After the file was in the right place, we could run COPY FROM within the Immerse SQL Editor or omnisql to copy the data into a table.

First, you'd need to create a new table (with the suitable data types) using SQL or the data import tool in the Immerse data manager.

Once the table exists, you could use COPY FROM to copy the data into your destination table.

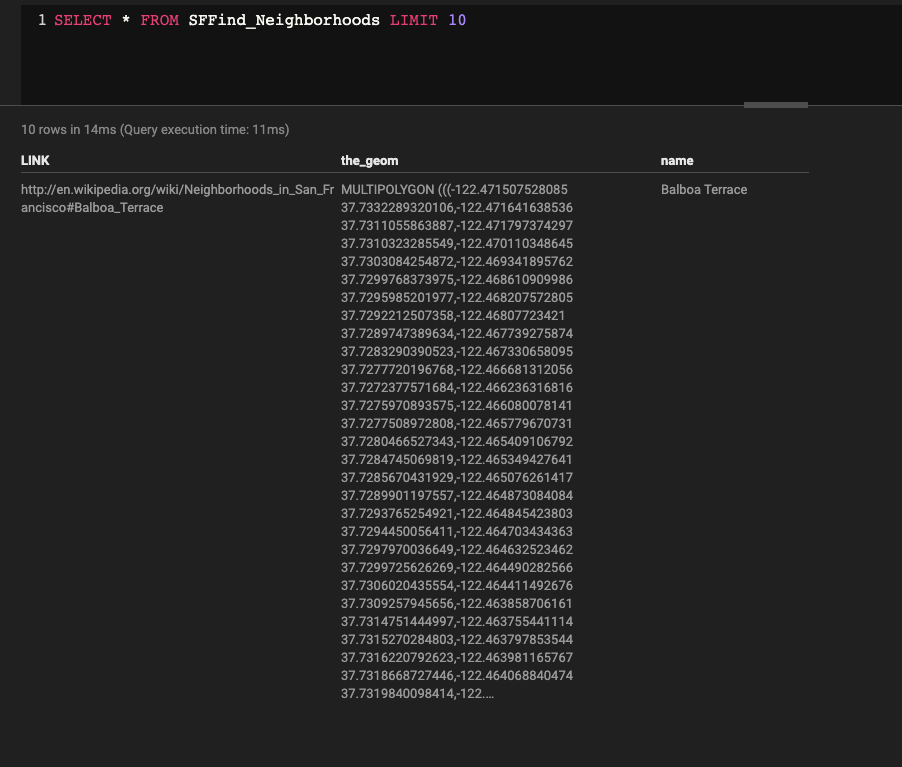

Finally, to check our work, we queried the data we loaded into the table.

Now it's your turn!

There are many ways to create and load tables in HEAVY.AI. Today we showed you one of the fastest ways to ingest large flat files using the Secure Copy Protocol (SCP) and the COPY FROM SQL method.

The video at the top of this post reviews some other ways you can create and load tables. Check it out and learn how to load data using:

Deploy HEAVY.AI and try out this workflow for yourself. If you do, let us know your thoughts through LinkedIn, Twitter, or our Community Forums.